AI Inference Market Report Scope & Overview:

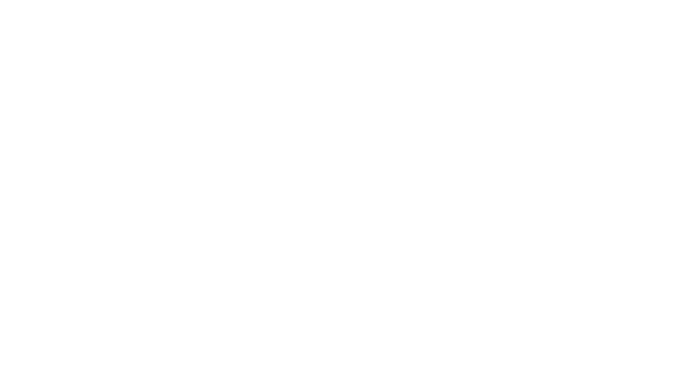

The AI Inference Market was valued at USD 87.56 Billion in 2024 and is expected to reach USD 349.49 Billion by 2032, growing at a CAGR of 18.91% over the forecast period 2025-2032.

Growth of the AI Inference Market is driven primarily by rising demand for near-real-time, low-latency AI applications across segments such as healthcare, automotive, finance, and retail. As more organizations scale up generative AI, natural language processing (NLP), and computer vision solutions, demand is rising for powerful inference that delivers faster and more accurate outputs. Advances in GPUs, NPUs, and high-bandwidth memory (HBM) give enterprises the building blocks to scale solutions to complex, compute-intensive AI workloads. In addition, the increasing penetration of cloud-based AI infrastructure and the integration of AI into IoT and edge devices are strengthening adoption in the market. According to one study, AI-powered diagnostics are predicted to handle over 1 billion patient scans annually.


AI Inference Market Trends

- Rising demand for real-time, low-latency AI decision-making across industries.
- Adoption of GPUs, NPUs, and specialized accelerators to boost inference speed and accuracy.
- Expansion of generative AI assistants requiring seamless, fast conversational responses.
- Increasing integration of inference capabilities into edge devices such as smartphones, IoT sensors, and smart cameras.
- Growing focus on privacy, security, and reduced bandwidth costs through local AI processing.
- Sector-specific applications like healthcare wearables, predictive maintenance in manufacturing, and AI-enabled retail experiences driving adoption.

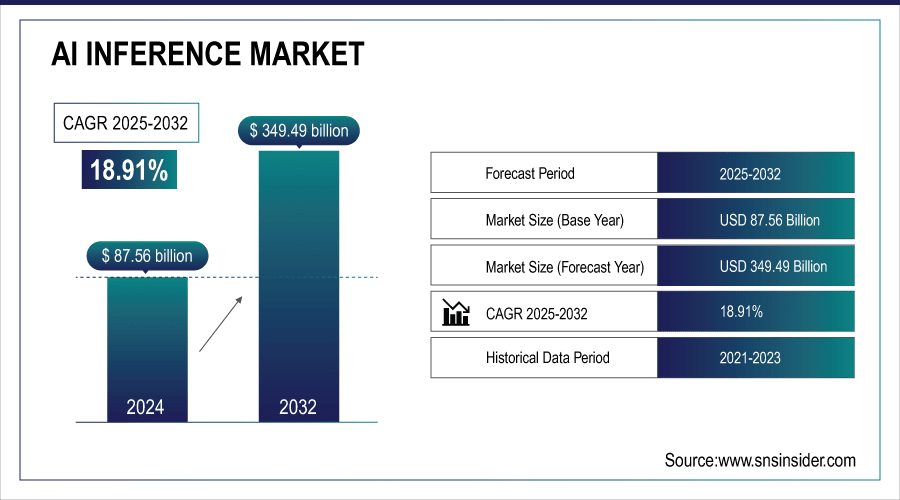

The U.S. AI Inference Market was valued at USD 21.84 Billion in 2024 and is expected to reach USD 85.80 Billion by 2032, growing at a CAGR of 18.68% over the forecast period 2025-2032, driven by a robust blend of technological innovation, private and federal investment, and dynamic ecosystem growth.
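The growth rates quoted for the global and U.S. markets can be sanity-checked from the start and end values alone. The sketch below is illustrative and not part of the report's methodology: the function name `cagr` is hypothetical, and it assumes eight annual compounding periods (base year 2024 to 2032), which the report does not state explicitly.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / periods) - 1."""
    if start_value <= 0 or end_value <= 0 or periods <= 0:
        raise ValueError("values and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


# Assumption: growth compounds annually from the 2024 base year to 2032.
PERIODS = 2032 - 2024

# Market sizes in USD billion, taken from the report text.
global_cagr = cagr(87.56, 349.49, PERIODS)
us_cagr = cagr(21.84, 85.80, PERIODS)

# U.S. share of the global market in the 2024 base year.
us_share_2024 = 21.84 / 87.56

print(f"Global CAGR (computed): {global_cagr:.2%}")
print(f"U.S. CAGR (computed):   {us_cagr:.2%}")
print(f"U.S. share of 2024 market: {us_share_2024:.2%}")
```

Under that eight-period assumption, both computed rates land close to the reported 18.91% and 18.68%. Small differences are expected from rounding in the published market sizes.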

AI Inference Market Segment Analysis

-

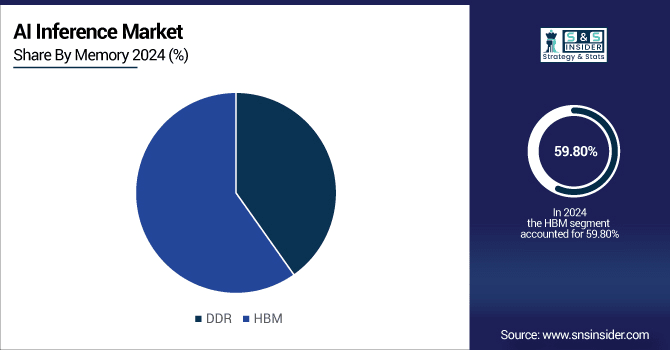

By Memory, HBM dominated with about 59.80% share in 2024, whereas DDR is witnessing the fastest growth at a CAGR of 18.64%.

-

By Compute, GPU accounted for around 45.08% of the market in 2024, while NPU emerged as the fastest-growing segment with a CAGR of 21.77%.

-

By Deployment, Cloud led the market with nearly 50.06% share in 2024, while Edge are projected to grow fastest with a CAGR of 19.51%.

-

By Application, the Machine Learning held the largest share at 30.04% in 2024, with Generative AI expected to be the fastest-growing vertical at a CAGR of 19.72%.

By Memory, HBM Dominates Market While DDR Segment Grows Fastest

High-Bandwidth Memory (HBM) holds the majority of the AI Inference Market share because it delivers the high data throughput that memory-intensive AI hardware, including GPUs and data center accelerators, requires. HBM lets enterprise AI infrastructure scale to large deep learning workloads and real-time analytics. By contrast, DDR is the fastest-growing memory type, thanks to its low price and its adoption in processors for edge devices, mobile platforms, and consumer electronics. DDR provides a low-cost inference solution for edge applications with moderate compute needs and smaller data volumes, driving rapid scale-out of AI at the edge.

By Compute, GPU Leads Market While NPU Segment Witnesses Fastest Growth

In 2024, GPU inference platforms lead the AI Inference Market. Their high-performance parallel processing makes them a strong choice for sophisticated AI workloads, including machine learning, computer vision, and generative AI. With scalable designs, high memory bandwidth, and mature software, GPUs have found a home in the enterprise, with use cases ranging from healthcare to automotive to finance. Meanwhile, the NPU segment is the fastest-growing, owing to the growth of edge AI applications, smartphones, and IoT devices. NPUs provide efficient, application-specific computation, orchestrate AI workloads, enable low-latency on-device inference, and support real-time decisions.

By Deployment, Cloud Leads Market While Edge Segment Exhibits Fastest Growth

Cloud deployment holds the largest part of the AI Inference Market share in 2024, owing to its scalability, centralized management, and integration with other enterprise AI applications. Cloud-based inference platforms enable organizations to deploy massive AI models with limited upfront infrastructure investment and to incorporate them into big data environments in domains such as finance, healthcare, and retail. By contrast, the edge segment is expected to grow rapidly, owing to the rising need for real-time, low-latency inference in smartphones, IoT sensors, autonomous vehicles, and smart cameras. Deploying on the edge reduces bandwidth consumption, improves confidentiality, and speeds up local decisions.

By Application, Machine Learning Holds Largest Share While Generative AI Segment Grows Fastest

Machine Learning (ML) continues to be the largest application segment in the AI Inference Market, owing to extensive adoption of predictive analytics, recommendation engines, and process automation across industries. ML models are highly flexible and are considered an important component of most enterprise AI solutions, which is why ML holds the lion's share of the market. Generative AI, on the other hand, is the fastest-growing application category, driven by the surge in content generation, the adoption of AI assistants like ChatGPT, and creative automation solutions. The continued adoption of generative AI in creative domains, enterprise automation, and virtual assistants is fueling demand for high-performance inference infrastructure as the AI market continues to gain momentum.

AI Inference Market Growth Drivers:

- Rising Demand for Real-Time AI Processing Across Industries

The demand for low-latency, real-time AI decision-making in healthcare, automotive, finance, and retail is the major factor driving the growth of the AI Inference Market. Inference systems for autonomous driving, banking fraud detection, personalized retail recommendations, and AI-based medical diagnostics need to deliver results in milliseconds. Self-driving cars, for example, need to make split-second decisions based on thousands of sensor inputs every second. Likewise, fast inference is essential for generative AI assistants to keep a conversation flowing naturally. The need for instant answers to business-relevant questions is leading to greater reliance on GPUs, NPUs, and edge inference devices that offer scale along with speed and accuracy.

A single autonomous vehicle can generate over 4 terabytes of data daily, requiring inference systems that process thousands of sensor inputs per second with response times under 10 milliseconds.

AI Inference Market Restraints:

- High Hardware and Infrastructure Costs Hindering Adoption

Despite rapid growth, the AI Inference Market faces challenges due to the high cost of purpose-built hardware and large-scale infrastructure. GPUs and NPUs, along with High-Bandwidth Memory (HBM), are very powerful but also costly and difficult to deploy and maintain. Enterprises need to invest billions of dollars in building AI inference pipelines in data centers, on top of energy and cooling costs. Small and medium-sized businesses are still largely absent from inference adoption because the hardware is not affordable for them to deploy. Finally, optimizing inference workloads requires cost-optimized software stacks, domain expertise, and integration with legacy systems, which create further hurdles. These cost and complexity hurdles can delay adoption in cost-sensitive markets.

AI Inference Market Opportunities:

- Edge AI Integration in Consumer and Industrial Devices

The integration of inference capabilities into edge devices (smartphones, IoT sensors, autonomous robots, smart cameras) is one of the greatest opportunities for the AI Inference Market. It lets organizations reduce their dependence on cloud-only inference and, more importantly, process data locally for faster decisions, lower latency, lower bandwidth cost, and better privacy and security. Wearables with AI inference enable real-time health monitoring in healthcare; edge-based AI supports predictive maintenance in manufacturing; and AI-enabled cameras in retail can offer instant, personalized shopping experiences. An ever-growing ecosystem of edge inference is unlocking substantial growth opportunities for technology providers across sectors.

An improvement of up to 20% in equipment uptime underscores the tangible operational gains that come from real-time AI inference, particularly in industrial contexts where milliseconds count.

AI Inference Market Regional Analysis

North America Dominates AI Inference Market with Advanced Technology and Early Adoption:

North America dominates the AI Inference Market owing to the presence of large technology companies, leading semiconductor manufacturers, and an established AI research ecosystem. In particular, the U.S. is a center of gravity for AI innovation, with leaders in AI silicon, cloud, and enterprise AI applications. Strong investment in AI infrastructure, specifically GPUs, NPUs, and data centers optimized for inference workloads, reinforces the region's position. Early adoption of AI solutions in major North American markets such as healthcare, automotive, finance, and retail, where low-latency and real-time AI processing is critical, is another factor behind its dominance.


U.S. Leads AI Inference Market with Policy Support and Technological Adoption:

In North America, the U.S. is the clear leader, with significant government support, such as the CHIPS and Science Act, for domestic semiconductors and AI infrastructure. Enterprises in healthcare, automotive, finance, and retail are adopting GPUs, NPUs, and edge AI devices. Data center improvements, cloud platforms, and academia-industry collaborations further strengthen adoption and innovation.

Asia Pacific Emerges as Fastest-Growing AI Inference Market Driven by Industrialization and Technology Adoption:

Expanding adoption of AI technologies in countries like China, Japan, South Korea, and India is making Asia Pacific the fastest-growing regional market for AI inference. Rapid industrialization, the expansion of smart manufacturing, rising investment in AI-based consumer electronics, and surging demand for cloud and edge AI infrastructure are driving market growth. Government support for AI research and innovation, together with an abundant talent pool, is further accelerating the adoption of inference systems in various fields. With rising demand for AI solutions and growing technological capabilities, Asia Pacific is set to emerge as a high-growth market for AI inference in the next few years.

China and South Korea Drive Rapid Growth in Asia Pacific AI Inference Market:

Asia Pacific’s growth is fueled by China’s large-scale AI adoption in smart manufacturing, autonomous vehicles, and cloud platforms. South Korea leverages advanced semiconductor and robotics technologies, while India focuses on IT, healthcare, and fintech AI applications.

Europe Strengthens AI Inference Market through Industrial Automation and Collaborative Initiatives

Europe is a lucrative region for the AI Inference Market, with Germany, France, and the UK as the prominent countries. Rapid investment in AI research and development, industrial automation, and edge computing solutions in manufacturing, healthcare, financial services, and automotive is driving market growth. Collaborative efforts among governments, research institutes, and private enterprises, as well as regulatory support for AI deployment, are solidifying Europe’s market position while promoting gradual cloud and edge AI adoption.

Germany Leads Europe in AI Inference Market with Industry 4.0 and Smart Manufacturing Initiatives

Germany focuses on Industry 4.0 and smart manufacturing, integrating AI inference into robotics, predictive maintenance, and industrial automation. Government initiatives support AI research and deployment.

Latin America and MEA Witness Steady AI Inference Market Growth Driven by Digital Transformation and Smart Initiatives

The AI Inference Market in Latin America and the Middle East & Africa continues to grow gradually, driven by increasing adoption in finance, healthcare, retail, and other industrial sectors. The leading countries, listed in order of total revenue contribution, are Brazil, Mexico, Argentina, the UAE, Saudi Arabia, and South Africa, where demand centers on cloud AI platforms, edge AI devices, and predictive analytics. Adoption has increased due to government initiatives, smart city projects, and digital transformation strategies that allow for quicker decision-making, process optimization, and improved customer experiences, ultimately creating long-term opportunities for AI solution providers in these emerging regions.

AI Inference Market Competitive Landscape

NVIDIA continues to lead the AI inference market with high-performance GPU and AI solutions, supporting generative AI, reasoning, and real-time inference for trillion-parameter models. Its platforms strengthen enterprise and cloud AI adoption globally.

- In 2024, NVIDIA unveiled the H200 AI chip and Blackwell platform to enhance large-scale AI inference and generative AI capabilities.

Intel is advancing AI inference with high-performance chips targeting large language model training and enterprise AI workloads, strengthening its position in cloud and edge AI solutions.

- In April 2024, Intel launched the Gaudi 3 AI chip and Jaguar Shores processor to accelerate AI model training and inference efficiency.

AMD is expanding its AI portfolio to support advanced inference workloads across data centers, edge devices, and enterprise applications with energy-efficient and high-accuracy processors.

- In 2024, AMD introduced the MI325X AI accelerator and Ryzen AI 300 Series processors featuring XDNA 2 architecture-based NPUs for enhanced AI performance.

AI Inference Market Key Players:

- NVIDIA
- Intel
- AMD
- Google (TPU)
- Huawei
- Alibaba (MetaX)
- Cambricon Technologies
- Positron
- MediaTek
- Inspur Systems
- Dell Technologies
- Hewlett Packard Enterprise (HPE)
- IBM
- GigaByte Technology
- H3C Technologies
- Lambda Labs
- Qualcomm
- Xilinx

| Report Attributes | Details |
|---|---|
| Market Size in 2024 | USD 87.56 Billion |
| Market Size by 2032 | USD 349.49 Billion |
| CAGR | CAGR of 18.91% From 2025 to 2032 |
| Base Year | 2024 |
| Forecast Period | 2025-2032 |
| Historical Data | 2021-2023 |
| Report Scope & Coverage | Market Size, Segments Analysis, Competitive Landscape, Regional Analysis, DROC & SWOT Analysis, Forecast Outlook |
| Key Segments | • By Compute (GPU, CPU, FPGA, NPU, Others) • By Memory (DDR, HBM) • By Deployment (Cloud, On-Premise, Edge) • By Application (Generative AI, Machine Learning, Natural Language Processing, Computer Vision) |
| Regional Analysis/Coverage | North America (US, Canada), Europe (Germany, UK, France, Italy, Spain, Russia, Poland, Rest of Europe), Asia Pacific (China, India, Japan, South Korea, Australia, ASEAN Countries, Rest of Asia Pacific), Middle East & Africa (UAE, Saudi Arabia, Qatar, South Africa, Rest of Middle East & Africa), Latin America (Brazil, Argentina, Mexico, Colombia, Rest of Latin America). |
| Company Profiles | NVIDIA, Intel, AMD, Google (TPU), Broadcom, Huawei, Alibaba (MetaX), Cambricon Technologies, Positron, MediaTek, Inspur Systems, Dell Technologies, Hewlett Packard Enterprise (HPE), Lenovo, IBM, GigaByte Technology, H3C Technologies, Lambda Labs, Qualcomm, Xilinx, and Others. |