AI Chip Market Size & Overview

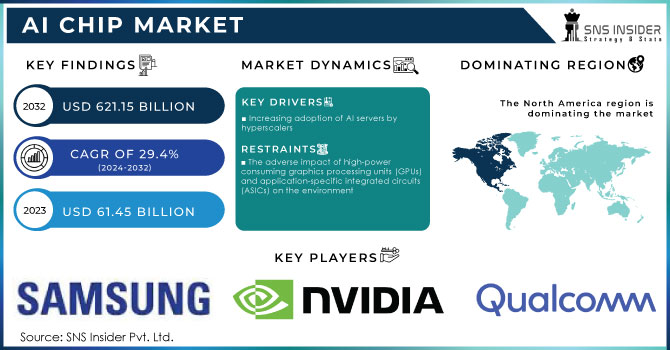

The AI chip market was valued at USD 61.45 billion in 2023 and is expected to reach USD 621.15 billion by 2032, growing at a CAGR of 29.4% over the 2024-2032 forecast period.
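The headline figures can be cross-checked with the standard compound annual growth rate formula. The sketch below is illustrative only: it assumes nine compounding periods (the 2023 base year through 2032), and the function names `cagr` and `project` are hypothetical helpers, not part of the report.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate as a fraction (0.25 means 25%)."""
    return (end_value / start_value) ** (1 / periods) - 1


def project(start_value: float, rate: float, periods: int) -> float:
    """Value after compounding start_value at `rate` for `periods` years."""
    return start_value * (1 + rate) ** periods


# Figures from the report (USD billion); the period count is an assumption.
BASE_2023 = 61.45
TARGET_2032 = 621.15
PERIODS = 9  # assumed: 2023 base year to 2032

implied_rate = cagr(BASE_2023, TARGET_2032, PERIODS)
projected_2032 = project(BASE_2023, 0.294, PERIODS)

print(f"Implied CAGR: {implied_rate:.1%}")
print(f"2032 value at 29.4%: USD {projected_2032:.1f} billion")
```

Under these assumptions the implied rate lands within a few tenths of a percentage point of the stated 29.4%, so the three headline numbers are mutually consistent.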

The growing consumption of AI servers by hyperscalers and rising demand for AI technologies and applications, including GenAI and AIoT, across BFSI, healthcare, retail & e-commerce, and media & entertainment are driving the AI chip market. In 2024, AI chips for data centers alone are expected to generate $21 billion in revenue, growing at a compound annual rate of nearly 12% to $33 billion by 2028.

The company's fifth annual Global Technology Report indicates that the market for AI and its hardware will continue to expand, growing at 40-55% a year and leading to revenues of between US$780 billion and US$990 billion. The report looks at AI and its sweeping impact on industry structure, enterprise value, data centers, and business opportunities, among other areas.

AI servers host AI chips to leverage high-speed parallel processing, achieve high performance efficiency, and handle AI workloads effectively in the cloud data center ecosystem. Other factors contributing to the growth of the AI chip market include the rising adoption of edge AI computing, a focus on real-time data processing, and strong government-led investment in AI infrastructure development, predominantly in Asia Pacific economies.

AI Chip Market Dynamics

Key Drivers:

- Increasing adoption of AI servers by hyperscalers

Organizations in BFSI, healthcare, retail, media, and automotive, among others, have increased their deployment of AI servers, while data centers and cloud providers have upgraded their infrastructure to support growing AI applications. AI server penetration was 8.8% in 2023 and is expected to rise to 32% by 2030, mainly because of wider use of chatbots, AIoT, predictive analytics, and NLP. All of these require powerful servers to handle the massive computation and data involved in training AI models.

According to a recent article, hyperscalers such as AWS, Google Cloud, and Microsoft Azure are investing heavily in AI technologies to address this demand. These companies are building AI into their cloud platforms so that more enterprises can use next-generation machine learning capabilities and high-performance infrastructure. Cloud spending continues to grow as enterprises seek scalable AI-infused solutions, and hyperscaler investment will fuel even greater demand for AI servers and chips across industries.

Restraints:

- The adverse impact of high-power-consuming graphics processing units (GPUs) and application-specific integrated circuits (ASICs) on the environment

Data centers and other infrastructure supporting AI workloads use GPUs and ASICs with parallel processing features. This makes them suitable for complex AI workloads; however, parallel processing in GPUs results in high power consumption, which increases energy costs for data centers and organizations deploying AI infrastructure. The same systems that handle large-scale AI operations therefore consume significant power to do so.

Datacenter processor shipments for AI acceleration grew strongly in 2023, and this trend is expected to continue in 2024 and 2025. Yole Group expects the datacenter GPU market to reach US$162 billion in 2029, an almost 26% CAGR between 2023 and 2029. Within this market, the flagship segment, with GPUs such as the Nvidia H100 and B100 and the AMD MI300 that are mainly used for generative AI training and inference, is expected to grow. AI ASICs are also growing strongly, toward a US$71 billion market in 2029, a 35% CAGR.
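The Yole Group figures give only the 2029 end values and growth rates, so the implied 2023 starting points can be back-solved by discounting. This is a rough sketch: the six-year period count (2023 to 2029) follows from the text, the resulting base values are derived arithmetic rather than figures from the report, and `implied_base` is a hypothetical helper name.

```python
def implied_base(end_value: float, rate: float, periods: int) -> float:
    """Starting value that compounds to end_value at `rate` over `periods` years."""
    return end_value / (1 + rate) ** periods


PERIODS = 6  # 2023 to 2029, as stated in the text

# End values in USD billion and CAGRs as quoted above (rates are approximate).
gpu_base_2023 = implied_base(162.0, 0.26, PERIODS)
asic_base_2023 = implied_base(71.0, 0.35, PERIODS)

print(f"Implied 2023 datacenter GPU market: USD {gpu_base_2023:.1f} billion")
print(f"Implied 2023 AI ASIC market: USD {asic_base_2023:.1f} billion")
```

Because the quoted growth rates are rounded ("almost 26%"), the back-solved bases should be read as order-of-magnitude estimates only.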

GPUs and ASICs work in parallel with thousands of cores. This requires immense computational power to carry out advanced AI workloads, including deep learning training and large-scale simulations. Hence, companies adopt network components with higher thermal design power (TDP) values. GPUs with higher TDP are in demand due to their better performance.

Therefore, AI chip manufacturers are focused on developing GPUs with a high TDP range. For example, Intel Corporation (US) launched the Flex 140 and Flex 170 data center GPUs in August 2022, with TDP ratings of up to 150 watts, followed in October 2023 by the Max 1450 GPU, with a TDP rating of around 600 watts. As data-intensive computing requirements continue to rise, manufacturers are developing chips with high processing power. However, the high energy consumption of GPUs and ASICs raises concerns about environmental impact, particularly in terms of carbon emissions and sustainability. As governments push for greener practices, the environmental footprint of AI hardware could become a critical factor in decision-making, limiting the adoption of high-power-consuming chips.

AI Chip Market - Key Segments

By Chip Type

The GPU segment is expected to hold the largest market share, 34%, during the forecast period. GPUs can efficiently handle the heavy computational loads required to train and run deep learning models, which depend on complex matrix multiplications. This makes them vital in data centers and AI research, where the fast growth of AI applications calls for efficient hardware.

Major manufacturers such as NVIDIA Corporation (US), Intel Corporation (US), and Advanced Micro Devices, Inc. (US) constantly develop and release new GPUs that enhance AI capabilities both in data centers and at the edge. For example, in November 2023, NVIDIA Corporation released an upgraded HGX H200 platform based on the Hopper architecture and featuring the H200 Tensor Core GPU. The H200 is the first GPU to offer HBM3e memory, providing 141 GB of memory at 4.8 terabytes per second.
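To put the two memory figures in perspective, dividing capacity by bandwidth gives the minimum time needed to stream the entire memory once. The sketch below is a back-of-the-envelope calculation only: it assumes decimal units (1 GB = 10^9 bytes, 1 TB = 10^12 bytes) and peak bandwidth, and the variable names are illustrative.

```python
# Quoted H200 figures; decimal units are an assumption of this sketch.
MEMORY_BYTES = 141e9            # 141 GB of HBM3e
BANDWIDTH_BYTES_PER_S = 4.8e12  # 4.8 TB/s peak memory bandwidth

# Lower bound on the time to read every byte of memory exactly once.
full_sweep_seconds = MEMORY_BYTES / BANDWIDTH_BYTES_PER_S

# Upper bound on how many such full sweeps fit into one second.
max_sweeps_per_second = 1 / full_sweep_seconds

print(f"One full memory sweep: {full_sweep_seconds * 1e3:.1f} ms")
print(f"At most {max_sweeps_per_second:.0f} full sweeps per second")
```

Real workloads rarely sustain peak bandwidth, so actual sweep times would be longer than this bound.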

Leading cloud service providers, including Amazon Web Services, Inc., Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure, have committed to deploying H200-based GPUs, which underscores that GPUs are a critical component of the cloud computing ecosystem. Improvements in GPU memory capabilities and the growing adoption of advanced GPUs by cloud service providers will further accelerate market growth.

By Function

The inference segment dominated the AI chip market in 2023 and is expected to grow at the highest CAGR, 30.38%, during the forecast period. Inference uses pre-trained AI models to make predictions or timely decisions based on new data. As AI adoption rises, data centers need more powerful inference capabilities, because businesses are focusing on AI integration to improve production efficiency, customer experience, and innovation.

Data centers are scaling their AI capabilities quickly, which raises the demand for both efficiency and performance in inference processing. The growing need for more energy-efficient, high-performing inference chips is another critical factor supporting the AI chip market. For example, SEMIFIVE recently released its 14 nm AI Inference SoC Platform, developed together with South Korea's Mobilint, Inc. This inference-focused platform comes with a quad-core high-performance 64-bit CPU, PCIe Gen4 interfaces, and LPDDR4 memory channels.

The solution targets custom AI chips, including ASICs, designed to power data center accelerators, AI vision processors, and big data analytics tools used for image and video recognition, all of which rely heavily on efficient and scalable inference processing. The development of AI inference SoC platforms reflects rising demand for special-purpose hardware that improves the performance of inference workloads inside data centers.

By Technology

Generative AI is expected to hold an estimated 24% share of the AI chip market during the forecast period. The technology relies on models that generate high-quality content, whether text, images, or code.

As Generative AI models become increasingly complex, data center service providers will require AI chips with higher processing capabilities and memory bandwidth. GenAI applications have also spread rapidly across retail & e-commerce, BFSI, healthcare, and media & entertainment enterprises, in applications such as NLP, content generation, and automated design generation. With demand for GenAI solutions increasing in these industries, growth in the AI chip market is anticipated to be strong in the coming years.

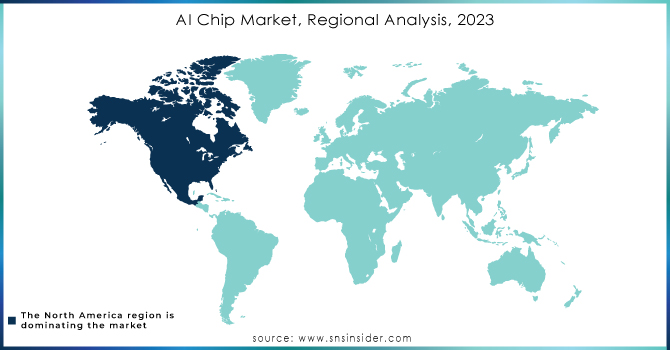

AI Chip Market Regional Analysis

The North American market is expected to grow at a CAGR of 31.78% over the forecast period. This is mainly because of the strong presence of U.S.-based tech giants in the AI chipset market, along with emerging AI companies in the region. Rising demand for AI technology in end-user industries such as healthcare, automotive, retail, agriculture, manufacturing, marketing, law, and fintech is a significant factor driving market growth in North America. A large consumer base with strong purchasing power, continuous investment in infrastructure, and a greater focus on in-house AI application development will propel growth of the AI chipset market in the coming years.

Asia-Pacific dominated the AI chip market, accounting for a share of around 33% in 2023. Growing demand for artificial intelligence in end-user industries such as healthcare, manufacturing, and automotive in countries such as China, Japan, South Korea, and Australia is driving the regional market. India is likely to contribute the most to this growth in the near term. For instance, Mumbai-based Yotta Data Services, backed by Hiranandani Group, has already ordered 16,000 GPUs from Nvidia to capitalize on the increasing demand for cloud-based AI development and applications. The APAC market is growing mainly because of the falling cost of AI hardware and its increasing adoption to enhance customer services.

Key players

Some of the major players in the AI Chip Market are:

- NVIDIA Corporation (NVIDIA A100 Tensor Core GPU, NVIDIA Jetson AGX Xavier)
- Intel Corporation (Intel Xeon Scalable Processors with AI Acceleration, Intel Nervana Neural Network Processor)
- Xilinx Inc. (Versal AI Core, Kria SOMs)
- Samsung Electronics Co., Ltd. (Exynos AI Processor, Samsung AI Accelerator)
- Micron Technology (Micron AI Accelerator, Micron AI Memory)
- Qualcomm Technologies (Snapdragon 8 Series Processors, Qualcomm AI Engine)
- IBM Corporation (IBM Power Systems with AI, IBM TrueNorth)
- Google Inc. (Tensor Processing Unit (TPU), Edge TPU)
- Microsoft Corporation (Azure Machine Learning, Project Brainwave)
- Apple Inc. (Apple Neural Engine, Apple Silicon)
- Amazon Web Services (AWS) (AWS Inferentia, AWS Trainium)
- Advanced Micro Devices, Inc. (AMD Radeon Instinct GPUs, AMD EPYC Processors with AI Acceleration)
- Graphcore (Graphcore IPU, Graphcore Poplar SDK)
- General Vision (GV1, GV2)
- Mellanox Technologies (ConnectX-6, BlueField-2)

- Huawei Technologies Co. Ltd. (Ascend AI Processor, MindSpore)

- Fujitsu (Fujitsu A64FX, Fujitsu PRIMEHPC FX100)
- Wave Computing (Wave Processor, Wave Computing Cloud)
- Mythic Inc. (Mythic MCU, Mythic AI Platform)
- Adapteva (Parallel Processing Unit (PPU), Adapteva Epiphany)
- Koniku (Koniku Labs Neural Processor, Koniku Labs Neuro-AI Platform)
- Tenstorrent (Tenstorrent Processor, Tenstorrent AI Platform)

Recent Trends

- In June 2024, Advanced Micro Devices, Inc. (US) released the AMD Ryzen AI 300 Series processors, whose NPUs offer 50 TOPS of AI processing power for next-generation AI PCs. These processors are the first based on the new Zen 5 architecture, with up to 12 high-performance CPU cores, and offer an advanced AI architecture for gaming and productivity.

- In May 2024, Google (US) introduced its sixth-generation TPU, called Trillium, which improves training and serving times for AI workloads and has a higher clock speed. With its larger matrix multiply units, the Trillium TPU is expected to power the next wave of AI models.

- In April 2024, Micron Technology, Inc. (US) and Silvaco Group, Inc. (US) expanded their cooperation to develop an AI-based offering, Fab Technology Co-Optimization (FTCO). FTCO enables customers to run machine learning software simulations on manufacturing data and build a computer model that simulates the entire wafer fabrication process. Micron Technology, Inc. has invested USD 5 million in the development of FTCO.

| Report Attributes | Details |
|---|---|
| Market Size in 2023 | US$ 61.45 Billion |
| Market Size by 2032 | US$ 621.15 Billion |
| CAGR | 29.4% from 2024 to 2032 |
| Base Year | 2023 |
| Forecast Period | 2024-2032 |
| Historical Data | 2020-2022 |
| Report Scope & Coverage | Market Size, Segments Analysis, Competitive Landscape, Regional Analysis, DROC & SWOT Analysis, Forecast Outlook |
| Key Segments | • By Technology (Generative AI, Machine Learning, Natural Language Processing, Computer Vision) • By Chip Type (CPU, GPU, ASIC, FPGA, Others) • By Function (Training, Inference) • By End-User (Consumer, Data Center, Government Organizations) |
| Regional Analysis/Coverage | North America (US, Canada, Mexico), Europe (Eastern Europe [Poland, Romania, Hungary, Turkey, Rest of Eastern Europe], Western Europe [Germany, France, UK, Italy, Spain, Netherlands, Switzerland, Austria, Rest of Western Europe]), Asia Pacific (China, India, Japan, South Korea, Vietnam, Singapore, Australia, Rest of Asia Pacific), Middle East & Africa (Middle East [UAE, Egypt, Saudi Arabia, Qatar, Rest of Middle East], Africa [Nigeria, South Africa, Rest of Africa]), Latin America (Brazil, Argentina, Colombia, Rest of Latin America) |
| Company Profiles | NVIDIA Corporation, Intel Corporation, Xilinx Inc., Samsung Electronics Co., Ltd., Micron Technology, Qualcomm Technologies, IBM Corporation, Google Inc., Microsoft Corporation, Apple Inc., Amazon Web Services (AWS), Advanced Micro Devices, Inc., Graphcore, General Vision, Mellanox Technologies, Huawei Technologies Co. Ltd., Fujitsu, Wave Computing, Mythic Inc., Adapteva |
| Key Drivers | • Increasing adoption of AI servers by hyperscalers |
| Restraints | • The adverse impact of high-power-consuming graphics processing units (GPUs) and application-specific integrated circuits (ASICs) on the environment |