HMC and HBM Market Size & Trends:

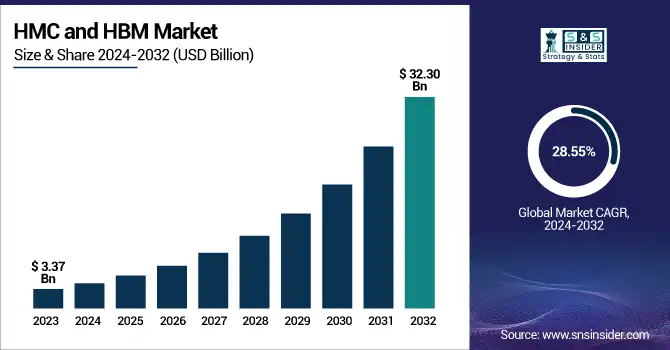

The Hybrid Memory Cube (HMC) and High-bandwidth Memory (HBM) Market was valued at USD 3.37 billion in 2023 and is projected to reach USD 32.30 billion by 2032, growing at a CAGR of 28.55% from 2024 to 2032. Demand comes from high-performance computing (HPC), AI workloads, and next-generation data center architectures. HBM3 has raised memory bandwidth further, with each stack delivering up to 819 GB/s.
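
The 819 GB/s per-stack figure can be reproduced from interface parameters. The sketch below assumes the commonly cited HBM3 values of a 1024-bit interface per stack and 6.4 Gb/s per pin; neither number appears in this report, and the function name is purely illustrative.

```python
def stack_bandwidth_gb_per_s(bus_width_bits: int, pin_rate_gbit_per_s: float) -> float:
    """Peak bandwidth of one memory stack in GB/s.

    Multiplies the interface width by the per-pin data rate and
    divides by 8 to convert bits to bytes.
    """
    return bus_width_bits * pin_rate_gbit_per_s / 8


# Assumed HBM3 parameters (not taken from the report):
# 1024-bit interface, 6.4 Gb/s per pin.
hbm3_peak = stack_bandwidth_gb_per_s(1024, 6.4)
print(f"HBM3 peak per stack: {hbm3_peak:.1f} GB/s")
```

This is a theoretical peak; sustained bandwidth in a real system depends on the memory controller and access pattern.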

TSMC, Samsung, and Micron are expanding their supply chains, putting pressure on smaller competitors. The U.S. market was valued at USD 0.92 billion in 2023 and is expected to reach USD 7.32 billion by 2032, a CAGR of 25.85%. Much of this growth is driven by the adoption of AI accelerators such as the NVIDIA H100, which use HBM3 to raise their performance. Geopolitical tensions are also shaping the supply chain, including export restrictions on advanced HBM to China. In manufacturing, yields continue to improve as defect density falls, which lowers costs. Together, these factors contribute to the growth of the market.
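
The growth rates quoted above can be checked against the stated start and end values. This is a minimal sketch, assuming the 2023 base-year value compounds over nine annual periods to 2032; the `cagr` helper is illustrative and not part of the report's methodology.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / periods) - 1


# Assumption: 2023 is the base year, so 2023 -> 2032 spans 9 periods.
PERIODS = 2032 - 2023

global_cagr = cagr(3.37, 32.30, PERIODS)  # USD billion, global market
us_cagr = cagr(0.92, 7.32, PERIODS)       # USD billion, U.S. market

print(f"Global: {global_cagr:.2%}")
print(f"U.S.:   {us_cagr:.2%}")
```

Under this assumption the global figure matches the stated 28.55%, while the U.S. figure comes out at roughly 25.9%, slightly above the stated 25.85%. The gap is small enough to be explained by rounding in the published endpoint values.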

Hybrid Memory Cube and High-bandwidth Memory Market Dynamics:

Drivers:

- Next-Gen AI and HPC Drive HBM Demand & Cooling Innovations

The rapid advancement of AI and high-performance computing (HPC) is fueling unprecedented demand for High-Bandwidth Memory (HBM), particularly HBM3+, which enables faster data processing with reduced latency. AI accelerators like NVIDIA H100, AMD Instinct MI300, and Intel Gaudi 3 leverage HBM to eliminate bottlenecks in deep learning, while HBM-based GPUs and TPUs are transforming hyperscale data centers. As AI computing power doubles every 10 months, semiconductor giants, including Micron, are expanding HBM production to meet rising demand. Simultaneously, advanced cooling solutions like the Vertiv CoolLoop Trim Cooler optimize energy efficiency, reducing cooling energy consumption by 70% and saving 40% space compared to conventional systems. Designed for next-gen AI factories, these solutions enhance direct-to-chip and immersion cooling. Additionally, AHEAD’s new 10-megawatt AI-HPC facility in Illinois, launching in 2025, underscores the industry’s aggressive push toward AI-driven infrastructure, creating 130 tech jobs and accelerating AI-HPC adoption.

Restraints:

- Integration Complexity and Architectural Barriers to HMC and HBM Adoption

Hybrid Memory Cube (HMC) and High-Bandwidth Memory (HBM) require specialized memory controllers and interfaces, making them incompatible with traditional DRAM-based systems. Conventional DDR memory connects through standardized module interfaces, whereas HMC uses high-speed serial links and HBM uses a very wide parallel interface routed over a silicon interposer. Both stack DRAM dies with through-silicon vias (TSVs), and both demand a significant redesign of memory subsystems and processing units. This architectural shift increases development costs, as companies must invest in new hardware, firmware updates, and software optimization. System validation also becomes more complex, requiring extensive testing to ensure reliability. The lack of standardized support across computing ecosystems, particularly in data centers, AI accelerators, and HPC applications, further complicates widespread adoption. Many organizations hesitate to transition because of the cost and engineering effort required, which slows market penetration despite the speed, power efficiency, and bandwidth advantages of these memory technologies.

Opportunities:

- HBM Adoption in Hyperscale Data Centers for Next-Gen Cloud Computing

As hyperscale data centers handle increasingly complex workloads, the demand for high-speed, power-efficient memory solutions is rising. High-Bandwidth Memory (HBM) offers significant advantages over traditional DRAM, including higher data transfer rates, lower latency, and reduced power consumption, making it well suited to cloud computing environments. With the rapid growth of AI-driven applications, big data analytics, and real-time processing, cloud service providers are seeking advanced memory technologies to enhance efficiency and scalability. In addition, the 3D-stacked architecture and through-silicon vias (TSVs) of HBM make efficient use of the available space and help with heat management, making HBM well suited to servers. Top cloud providers and hyperscalers are set to invest in HBM-powered architectures to speed up machine-learning workloads while also increasing the efficiency of virtualization and lowering their energy bills. As next-generation cloud infrastructure continues to develop, the adoption of HBM is anticipated to grow and to improve data processing and computational efficiency.

Challenges:

- Thermal Challenges in 3D-Stacked HBM and HMC Memory Architectures

The 3D-stacked architecture of High-Bandwidth Memory (HBM) and Hybrid Memory Cube (HMC) enables higher data transfer rates but also leads to increased heat generation compared to traditional DRAM. The use of through-silicon vias (TSVs) for vertical data movement creates hotspots within the stacked layers, making effective thermal management crucial. Excessive heat can degrade performance, cause reliability issues, and reduce the lifespan of memory modules. To counteract this, manufacturers are developing advanced cooling solutions, including improved heat spreaders, liquid cooling systems, and thermally optimized packaging materials. Additionally, innovations in chiplet designs and dynamic thermal management techniques are being explored to enhance efficiency. As HBM adoption expands in AI, HPC, and data center applications, addressing these thermal challenges will be critical to ensuring sustained high-performance computing and long-term system stability.

Hybrid Memory Cube (HMC) and High-bandwidth Memory (HBM) Market Segment Analysis:

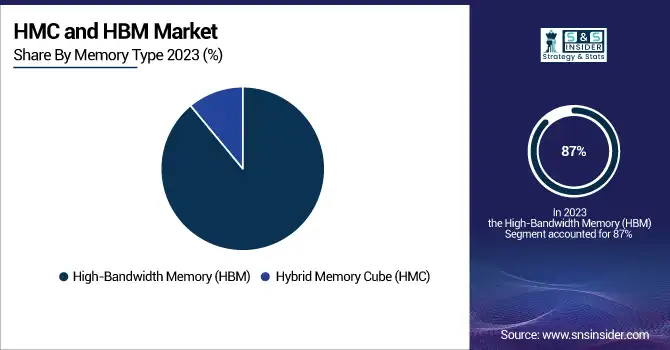

By Memory Type

In 2023, the High-Bandwidth Memory (HBM) segment accounted for approximately 87% of the total market revenue. With superior performance, lower power consumption, and the ability to handle high-speed data transfer, high-bandwidth memory is well suited to AI, HPC, and advanced graphics processing, making it the preferred choice over GDDR for GPU applications. HBM features a 3D-stacked architecture that uses through-silicon vias (TSVs) to offer higher bandwidth and more efficient use of space than conventional DRAM. As workloads for AI, cloud computing, and other bandwidth-intensive applications continue to expand, HBM uptake accelerates further. SK Hynix, Samsung, Micron, and other major semiconductor manufacturers continue to improve HBM, which has become a necessary part of next-generation computing. Supported by the development of AI accelerators and data center infrastructure, HBM is expected to keep its lead across future generations as the market evolves toward new workloads and AI-centric processing.

The Hybrid Memory Cube (HMC) segment is projected to experience the fastest growth from 2024 to 2032, due to the high-speed data processing and energy efficiency that HMC offers. HMC is fundamentally different from conventional DRAM: it employs a 3D-stacked architecture with through-silicon vias (TSVs), which increases bandwidth substantially while reducing overall latency. This makes it well suited to HPC, AI acceleration, and networking applications. In particular, the automotive, defense, and high-end computing industries will drive adoption, as they require faster and more effective memory solutions. Key stakeholders, namely Micron and Intel, are funding HMC advancements to improve scalability and integration. Although HBM is still dominant, HMC's ability to manage complex workloads efficiently makes it an important technology for future computing architectures.

By Product Type

In 2023, the Graphics Processing Unit (GPU) segment held the largest market share, accounting for approximately 45% of total revenue. GPUs are widely adopted because of the need for high-performance computing, artificial intelligence (AI), machine learning, and gaming. Demand for AI-driven applications, cloud computing, and advanced graphics processing is driving GPU sales. With NVIDIA, AMD, and Intel continuing to invest in GPU architecture development, GPU efficiency, power consumption, and data processing speeds are expected to keep improving. Market growth has been further supported by the emergence of deep learning, cryptocurrency mining, and real-time rendering in many sectors, such as healthcare and autonomous vehicles. GPUs aimed at AI also incorporate High-Bandwidth Memory (HBM), which enables high-speed data transfer. These patterns are expected to continue as the AI and gaming industries grow.

The Accelerated Processing Unit (APU) segment is projected to grow at the fastest rate from 2024 to 2032, owing to the trend toward integrating the CPU and GPU on a single chip. APUs suit gaming, AI workloads, and embedded devices, providing better performance and energy efficiency. Their compact arrangement also reduces latency and power requirements, which benefits laptops, gaming consoles, and edge computing devices. Growing AI-based applications, real-time data processing, and cost-efficient computing needs are increasing APU adoption. Top manufacturers such as AMD continue to improve processing power and graphics performance. With sectors demanding both high performance and energy efficiency, APUs are set to play a larger role in future computing architectures, particularly in consumer electronics, cloud gaming, and AI-related applications.

By Application

In 2023, the graphics segment held the largest revenue share of approximately 35%, due to the growing demand for high-performance visual computing. Market growth was driven by the increasing adoption of graphics processing across gaming, artificial intelligence (AI), virtual reality (VR), and professional visualization. High-end GPUs have turned video game graphics into an interactive, movie-like experience, helped by high-bandwidth memory (HBM), ray-tracing capabilities, and AI-driven workloads. Major players such as NVIDIA, AMD, and Intel continue to improve both processing speeds and energy efficiency. The advent of cloud gaming, 3D rendering, and AI-powered image processing has fueled demand even more. Similarly, markets like automotive, healthcare, and entertainment are relying more on high-end graphics solutions for simulation and design applications. The graphics segment is anticipated to remain dominant as the applications of visual computing expand across industries.

The networking segment is expected to witness significant growth from 2024 to 2032, owing to the rising number of data centers that require high-speed data transfer and to the expansion of cloud computing and 5G infrastructure. The proliferation of artificial intelligence (AI), edge computing, and the Internet of Things (IoT) is driving higher memory bandwidth requirements in networking hardware. Networking devices are using High-Bandwidth Memory (HBM) and Hybrid Memory Cube (HMC) to speed up data processing and minimize latency. Companies are therefore investing in next-generation network architectures to support high-performance computing, data centers, and AI-driven workloads. In addition, demand is being driven by the growth in hyperscale data centers and the adoption of software-defined networking (SDN).

HMC and HBM Market Regional Outlook:

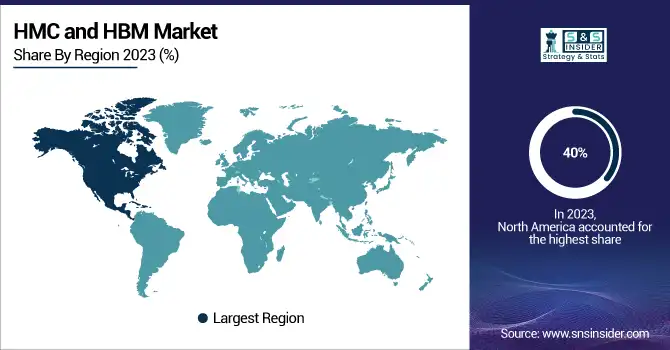

In 2023, North America dominated the Hybrid Memory Cube (HMC) and High-Bandwidth Memory (HBM) market, accounting for approximately 40% of total revenue. This lead was driven by the strong presence of leading semiconductor companies, data centers, and cloud service providers in the region. The rapid adoption of AI, machine learning, and high-performance computing (HPC) has fueled demand for advanced memory solutions, particularly in industries such as IT, automotive, and telecommunications. Companies like Google, Amazon, and Microsoft further strengthened market growth. Additionally, rising investments in 5G infrastructure and edge computing have increased the need for high-speed, energy-efficient memory solutions. Government support for semiconductor manufacturing and R&D, along with strategic partnerships among key players, continues to drive innovation and solidify North America’s leading position in the market.

The Asia Pacific region is projected to be the fastest-growing market for Hybrid Memory Cube (HMC) and High-Bandwidth Memory (HBM) from 2024 to 2032. This growth is fueled by the rising demand for AI, high-performance computing (HPC), and 5G applications, particularly in China, Japan, South Korea, and Taiwan. The region is home to major semiconductor manufacturers, including Samsung, SK Hynix, and TSMC, which are investing heavily in next-generation memory technologies. The rapid expansion of hyperscale data centers and cloud computing infrastructure is further driving demand for high-speed, energy-efficient memory solutions. Additionally, government initiatives to boost semiconductor production and advancements in automotive and consumer electronics are accelerating market adoption. With increasing R&D investments and strong industrial growth, Asia Pacific is poised to lead in HBM and HMC adoption in the coming years.
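
The approximate 2023 revenue shares quoted in the segment and regional analysis can be translated into implied dollar values. This is a rough sketch, assuming each share applies to the USD 3.37 billion market total for 2023; because the shares are stated as approximate, the results are indicative only, and the dictionary labels are illustrative.

```python
MARKET_2023_USD_BN = 3.37  # total market size in 2023, USD billion

# Approximate 2023 revenue shares quoted in the report.
shares = {
    "HBM (memory type)": 0.87,
    "GPU (product type)": 0.45,
    "Graphics (application)": 0.35,
    "North America (region)": 0.40,
}

# Implied revenue per leading segment, rounded to two decimals.
implied = {
    name: round(MARKET_2023_USD_BN * share, 2)
    for name, share in shares.items()
}

for name, value in implied.items():
    print(f"{name}: ~USD {value:.2f} billion")
```

As a consistency check, the implied North American total sits above the USD 0.92 billion reported for the U.S. market alone, as it should.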

Key Players in Hybrid Memory Cube (HMC) and High-bandwidth Memory (HBM) Market are:

- Micron Technology (USA) – HBM, DRAM, NAND flash memory, Hybrid Memory Cube (HMC)
- Samsung Electronics (South Korea) – HBM, DRAM, NAND flash, SSDs, AI accelerators
- SK Hynix (South Korea) – HBM, DRAM, NAND flash, AI memory solutions
- Advanced Micro Devices (AMD) (USA) – GPUs, APUs, AI accelerators, HBM-enabled processors
- Intel Corporation (USA) – CPUs, FPGAs, AI chips, Optane memory
- Xilinx (USA) (now part of AMD) – FPGAs, adaptive computing solutions, AI hardware
- Fujitsu (Japan) – Supercomputing processors, AI computing solutions
- NVIDIA (USA) – GPUs, AI accelerators, HBM-enabled processors
- IBM (USA) – High-performance computing, AI processors
- Open-Silicon, Inc. (USA) – ASIC design, custom memory solutions
- Rambus Incorporated (USA) – Memory interface chips, HBM PHY, high-speed memory solutions

Suppliers of raw materials and components for the Hybrid Memory Cube (HMC) and High-bandwidth Memory (HBM) Market:

- Shin-Etsu Chemical Co., Ltd.
- SUMCO Corporation
- Amkor Technology
- ASE Group
- Siliconware Precision Industries (SPIL)
- Advantest Corporation
- Teradyne Inc.
- BASF SE
- Dow Inc.

| Report Attributes | Details |
|---|---|
| Market Size in 2023 | USD 3.37 Billion |
| Market Size by 2032 | USD 32.30 Billion |
| CAGR | CAGR of 28.55% From 2024 to 2032 |
| Base Year | 2023 |
| Forecast Period | 2024-2032 |
| Historical Data | 2020-2022 |
| Report Scope & Coverage | Market Size, Segments Analysis, Competitive Landscape, Regional Analysis, DROC & SWOT Analysis, Forecast Outlook |
| Key Segments | • By Memory Type (Hybrid Memory Cube (HMC), High-bandwidth memory (HBM)) • By Product Type (Graphics Processing Unit (GPU), Central Processing Unit (CPU), Accelerated Processing Unit (APU), Field-programmable Gate Array (FPGA), Application-specific Integrated Circuit (ASIC)) • By Application (Graphics, High-performance Computing, Networking, Data Centers) |
| Regional Analysis/Coverage | North America (US, Canada, Mexico), Europe (Eastern Europe [Poland, Romania, Hungary, Turkey, Rest of Eastern Europe], Western Europe [Germany, France, UK, Italy, Spain, Netherlands, Switzerland, Austria, Rest of Western Europe]), Asia Pacific (China, India, Japan, South Korea, Vietnam, Singapore, Australia, Rest of Asia Pacific), Middle East & Africa (Middle East [UAE, Egypt, Saudi Arabia, Qatar, Rest of Middle East], Africa [Nigeria, South Africa, Rest of Africa]), Latin America (Brazil, Argentina, Colombia, Rest of Latin America) |
| Company Profiles | Micron Technology (USA), Samsung Electronics (South Korea), SK Hynix (South Korea), Advanced Micro Devices (AMD) (USA), Intel Corporation (USA), Xilinx (USA) (now part of AMD), Fujitsu (Japan), NVIDIA (USA), IBM (USA), Open-Silicon, Inc. (USA), Rambus Incorporated (USA). |